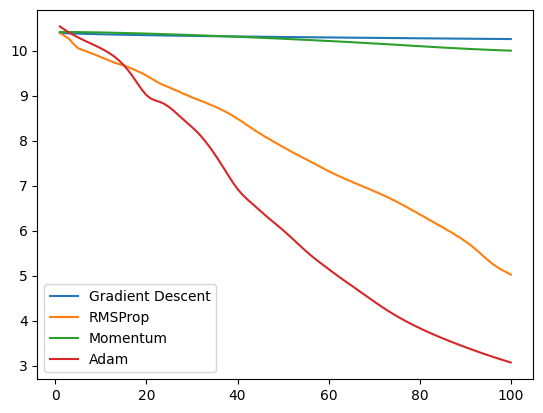

Hey everyone! I developed neograd, a deep learning framework written from scratch in Python and NumPy. It supports automatic differentiation, popular optimization algorithms such as Adam, and 2D and 3D convolution and max-pooling layers. It can also save models and their parameters to disk and load them back. I initially built it to understand how automatic differentiation works under the hood in PyTorch, but later extended it into a complete framework. I'm looking for feedback on which features to add next and what can be improved. Please check out the GitHub repo at https://github.com/pranftw/neograd. Thanks!
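For anyone curious about the same question that motivated the project, here is a minimal sketch of reverse-mode automatic differentiation in NumPy. It is a simplified illustration, not neograd's actual code or API. The `Tensor` class, the `backward_fn` closures, and the tiny op set (elementwise `+`, `*`, and `sum` on same-shape inputs, with no broadcasting) are hypothetical choices made for brevity.

```python
import numpy as np


class Tensor:
    """Hypothetical minimal tensor for illustration; not neograd's API."""

    def __init__(self, data, parents=(), backward_fn=None):
        self.data = np.asarray(data, dtype=float)
        self.grad = np.zeros_like(self.data)
        self._parents = parents          # tensors this one was computed from
        self._backward_fn = backward_fn  # pushes self.grad into the parents' grads

    def __add__(self, other):
        out = Tensor(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        out = Tensor(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def sum(self):
        out = Tensor(self.data.sum(), (self,))

        def backward_fn():
            # every element contributes to the sum with weight 1
            self.grad += np.ones_like(self.data) * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Sort the graph topologically so each node's grad is fully
        # accumulated before it is propagated to its parents.
        order, visited = [], set()

        def visit(node):
            if id(node) not in visited:
                visited.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = np.ones_like(self.data)  # seed: d(self)/d(self) = 1
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


x = Tensor([1.0, 2.0, 3.0])
w = Tensor([4.0, 5.0, 6.0])
loss = (x * w + x).sum()  # loss = sum(x * w + x)
loss.backward()
print(x.grad)  # dloss/dx = w + 1 -> [5. 6. 7.]
print(w.grad)  # dloss/dw = x     -> [1. 2. 3.]
```

Each operation records its inputs and a closure holding its local derivative; `backward()` then walks the recorded graph in reverse topological order and applies the chain rule. Gradients are accumulated with `+=` so that a tensor used more than once (like `x` above) receives the sum of all its contributions.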

Colab Notebooks

https://colab.research.google.com/drive/1D4JgBwKgnNQ8Q5DpninB6rdFUidRbjwM?usp=sharing

https://colab.research.google.com/drive/184916aB5alIyM_xCa0qWnZAL35fDa43L?usp=sharing